Deep Learning Lab Course Project

During the Deep Learning Lab course, we studied and implemented code for mainstream deep learning tasks: spatial transformers, super-resolution, object detection, semantic segmentation, Grad-CAM, GAN/CycleGAN, and an RNN translator.

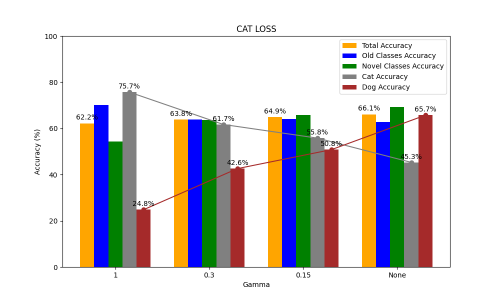

For the final project, we used knowledge distillation to develop a learning-without-forgetting method built on our own novel idea. Learning without forgetting means taking a model already trained on old classes and training it on images of new classes only, while maintaining high performance on both the old and new classes. I came up with ideas to balance the performance of the old class ‘dog’ and the new class ‘cat’, since their performance seemed highly correlated. In addition, I devised a novel ‘max feature loss’ to maintain performance on the old task.
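
To make the distillation idea concrete, here is a minimal sketch of a generic learning-without-forgetting objective in plain Python. It is not the project's actual code and it does not implement the ‘max feature loss’, whose definition is not given here. The function names, the `temperature` and `lambda_old` parameters, and the example logits are all assumptions chosen for illustration. The idea is that a frozen copy of the old model provides soft targets for the old class, and the updated model is penalized when its old-class outputs drift away from them while it learns the new class.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Standard cross-entropy for one sample with an integer class label."""
    return -math.log(softmax(logits)[label])

def distillation_loss(old_logits, new_logits, temperature=2.0):
    """Cross-entropy between the softened outputs of the frozen old model
    (soft targets) and the softened outputs of the model being trained."""
    targets = softmax(old_logits, temperature)
    preds = softmax(new_logits, temperature)
    return -sum(t * math.log(p) for t, p in zip(targets, preds))

def lwf_loss(old_logits, new_logits_old_heads, new_logits_all, label,
             lambda_old=1.0, temperature=2.0):
    """Hypothetical combined objective: new-task cross-entropy plus a
    weighted distillation term that protects old-task behaviour."""
    new_task = cross_entropy(new_logits_all, label)
    old_task = distillation_loss(old_logits, new_logits_old_heads, temperature)
    return new_task + lambda_old * old_task

# Illustrative values only: two old-task outputs, three outputs after
# a new-class output is added, and a sample labelled as the new class.
old_logits = [2.0, 0.5]            # frozen old model
new_logits_all = [1.8, 0.4, 2.5]   # updated model, all outputs
loss = lwf_loss(old_logits, new_logits_all[:2], new_logits_all, label=2)
print(loss)
```

Raising `lambda_old` favors old-class performance at the expense of the new class, which is one simple knob for the kind of old/new balance described above.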